I write pieces on sub-sectors and trends within health tech and life sciences.

The pace of change in life sciences today is staggering. Exciting new therapeutic modalities, including cell and gene therapies, CRISPR, and mRNA, are saving lives and make up an increasing share of R&D pipelines. These modalities expand the toolbox that scientists and researchers have to probe, understand, and treat diseases. Scientific understanding is reaching new heights with DeepMind’s ability to accurately predict protein structures. And the data to drive further advances is increasingly available. For example, researchers can use the UK Biobank, a detailed genetic and clinical dataset spanning more than a decade and over 500,000 participants, to understand the genetic origins of diseases at a population level.

Many parts of the life sciences industry are changing. I’ve written before about how real-world data is transforming processes from research to commercialization, and in later pieces I’ll explore changes to clinical trials and manufacturing.

But today I want to focus on the new tech stack for research and development (R&D) teams. Making these teams more effective and efficient is one of the most important things we can do as a society. After all, these teams have powered enormously impactful discoveries: a cure for hepatitis C, COVID vaccines, and CAR-T therapies that meaningfully extend cancer patients’ lives. Similar approaches are also powering compelling advances in agriculture, lab-grown meat, and more.

Life sciences R&D is a massive $230B market. Unfortunately, R&D efficiency has been declining steadily for decades: pharma companies often spend over $1B for each drug successfully brought to market. This exponential decline in productivity is often called Eroom’s law (“Moore” spelled backward), the inverse of the exponential improvement in semiconductors described by Moore’s law.
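Eroom’s law is usually stated as a halving time. The number of new drugs approved per billion inflation-adjusted US dollars of R&D spending has halved roughly every nine years since 1950. Taking that nine-year halving time as a working assumption gives a rough worked form of the decline. Here P₀ is the number of drugs approved per billion dollars in a starting year, and t is the number of years elapsed:

$$
P(t) \approx P_0 \cdot 2^{-t/9}, \qquad P(45) \approx P_0 \cdot 2^{-45/9} = P_0 \cdot 2^{-5} = \frac{P_0}{32}
$$

A constant-rate decline compounds: five halvings add up to roughly a 32-fold drop in drugs approved per dollar spent.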

A lot will be needed to reverse this decline in efficiency, but a new software and infrastructure stack for these teams seems particularly poised to help.

How R&D Works

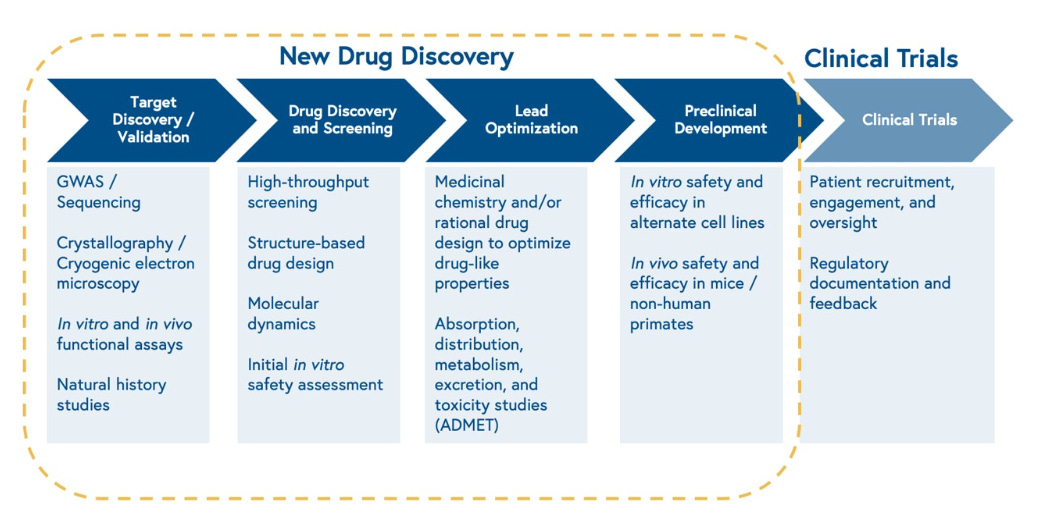

Life sciences R&D is a complex process, and it differs in important ways across therapeutic modalities; those differences are beyond the scope of this piece. But the same basic structure applies.

At its simplest, scientists are looking for therapies that affect a disease. This usually starts with finding a target (or combination of targets): typically a protein that is associated with a disease or that causes it. Identifying a target often requires deep study and understanding of the disease. Once a target is identified, researchers look for compounds that can act on it. These compounds take many forms. In the early days of drug development they were chemicals found in nature (sometimes by accident, like penicillin), and later they were synthesized. Today’s drug development relies more on biology, in which cells are changed or “programmed” through precise processes. In CAR-T therapy, for example, a patient’s own T cells are reengineered to express a receptor that binds to proteins on cancer cells. Biologics like these include engineered proteins, antibodies, and cell and gene therapies.

So how are these compounds found today? Scientists start by examining increasingly large datasets to develop hypotheses about which interventions might bind to and alter the target. They then generate compounds or biological entities against the target. For small molecules, these early experiments often consist of high-throughput screens of many different compounds. The screens run either in simulation or in the lab, supported by computer-aided design, computational methods, and automated labs. For large molecules and cell and gene therapies, efficacy is also assessed in vitro (on living cells outside their normal biological environment) using large-scale lab automation hardware. The data from these experiments is analyzed to iterate on the therapeutic design. This process involves more logistics and more steps because the therapeutic being tested has to be biologically engineered, particularly for cell therapies.
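The computational side of small-molecule screening often begins with cheap filters applied before anything is synthesized. The sketch below applies Lipinski’s rule of five, a long-standing heuristic for flagging compounds likely to have poor oral absorption, as a pre-filter over a virtual library. It rests on several assumptions:

- The compound names and property values are made up.
- The properties are assumed to be precomputed; real pipelines derive them from chemical structures with cheminformatics toolkits.
- The "at most one violation" cutoff is one common convention, not necessarily what any given team uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Compound:
    """Hypothetical precomputed properties for one virtual-library compound."""
    name: str
    mol_weight: float   # molecular weight in daltons
    clogp: float        # calculated octanol-water partition coefficient
    h_donors: int       # hydrogen-bond donors
    h_acceptors: int    # hydrogen-bond acceptors


def rule_of_five_violations(c: Compound) -> int:
    """Count how many of Lipinski's four thresholds a compound exceeds."""
    return sum([
        c.mol_weight > 500,
        c.clogp > 5,
        c.h_donors > 5,
        c.h_acceptors > 10,
    ])


def prefilter(library: list[Compound], max_violations: int = 1) -> list[Compound]:
    """Keep compounds with at most `max_violations` rule-of-five violations."""
    return [c for c in library if rule_of_five_violations(c) <= max_violations]


# Made-up virtual library for illustration only.
library = [
    Compound("cmpd-001", 342.4, 2.1, 2, 5),   # no violations
    Compound("cmpd-002", 612.7, 6.3, 4, 9),   # MW and cLogP too high -> 2 violations
    Compound("cmpd-003", 534.1, 4.2, 3, 8),   # MW too high -> 1 violation
]

kept = prefilter(library)
print([c.name for c in kept])  # ['cmpd-001', 'cmpd-003']
```

A filter like this is cheap enough to run over very large libraries. It only removes likely non-starters; it says nothing about whether a compound actually binds the target.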

Once a promising lead is found, it goes through extensive refinement to increase its selectivity for the target, its ability to move through the body and reach the tissue(s) it needs to, and its efficacy in blocking or changing the target. This happens both in vitro and in vivo (when the drug is tested in living animals such as mice, rats, dogs, and/or non-human primates). ADMET is the acronym for what is assessed at this stage: Absorption, Distribution, Metabolism, Excretion, and Toxicity. This process is usually highly iterative, and computer simulations are also run to further optimize these therapeutics.

All of this complexity is part of the reason a drug can take 10–15 years to reach the market. After all, the universe of possibilities is vast: the number of possible molecular structures is greater than the number of seconds that have elapsed since the Big Bang. The Bessemer team has published a great overview of this process.

The labs in which these experiments are run have many different pieces of equipment, each testing different things to understand disease and interventions:

- flow cytometers, which measure characteristics of cells and particles
- next-generation sequencing machines, which give precise genetic read-outs
- chromatography systems and nuclear magnetic resonance (NMR) spectrometers, which separate compounds and reveal their molecular structure

There’s also a whole set of lab automation tools, like liquid-handling robots, that help run these experiments faster.

Challenges in R&D

Surprisingly, until recently much of this cutting-edge research was still recorded in paper notebooks and Excel spreadsheets. That made experiments difficult to reproduce, audit, and collaborate on. A lot of lab work is still not automated, and a tremendous amount of manual labor is often required: R&D teams still have brilliant PhD scientists spending hours pipetting.

When data is recorded, it often lives locally, in an instrument-specific format and location. Because each device uses its own file format, transferring and combining these datasets to study them together is quite difficult. Researchers spend 30%–40% of their time searching for, aggregating, and cleaning data. While many industries moved to the cloud and adopted leading horizontal solutions, large pharma companies mostly have their own disconnected on-premises systems. Auditing and reviewing this data is also difficult. Researchers lack tools to see what data cleaning was done, which algorithm version was used, and what analysis was run in tools like R and Python.
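The file-format problem is easier to see in a concrete case. In this minimal sketch, two hypothetical plate readers export the same optical-density measurement in different shapes: one as CSV, the other as nested JSON with lowercase well IDs. Small per-vendor parsers map both into one common record type so the readings can be combined. The vendor formats, field names (`Well`, `OD600`, `position`, `abs`) and the `Measurement` schema are all assumptions for illustration, not any real instrument's export.

```python
import csv
import io
import json
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Measurement:
    """Common, instrument-agnostic record (hypothetical schema)."""
    instrument: str
    well: str      # plate position normalized to upper case, e.g. "A1"
    metric: str
    value: float


def parse_reader_a(text: str) -> list[Measurement]:
    """Hypothetical vendor A export: CSV with 'Well' and 'OD600' columns."""
    rows = csv.DictReader(io.StringIO(text))
    return [
        Measurement("reader_a", row["Well"].strip().upper(), "od600", float(row["OD600"]))
        for row in rows
    ]


def parse_reader_b(text: str) -> list[Measurement]:
    """Hypothetical vendor B export: nested JSON with lowercase well IDs."""
    payload = json.loads(text)
    return [
        Measurement("reader_b", r["position"].strip().upper(), "od600", float(r["abs"]))
        for r in payload["readings"]
    ]


# One parser per source format; supporting a new instrument means adding one entry.
PARSERS = {"reader_a": parse_reader_a, "reader_b": parse_reader_b}


def harmonize(exports: list[tuple[str, str]]) -> list[Measurement]:
    """Parse each (source, raw_text) pair into the common Measurement schema."""
    records: list[Measurement] = []
    for source, text in exports:
        records.extend(PARSERS[source](text))
    return records


def mean_by_well(records: list[Measurement]) -> dict[str, float]:
    """Once records share a schema, combining them is a simple group-by."""
    values: dict[str, list[float]] = defaultdict(list)
    for m in records:
        values[m.well].append(m.value)
    return {well: sum(v) / len(v) for well, v in values.items()}


reader_a_csv = "Well,OD600\nA1,0.42\nA2,0.38\n"
reader_b_json = '{"readings": [{"position": "a1", "abs": 0.45}]}'

records = harmonize([("reader_a", reader_a_csv), ("reader_b", reader_b_json)])
print(len(records))                   # 3
print(sorted(mean_by_well(records)))  # ['A1', 'A2']
```

The design keeps the parsers as the only format-specific code, so everything downstream works on one schema. Real instrument integrations are much messier (binary formats, metadata, units), which is part of why dedicated integration vendors exist.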

Other industries have seen tremendous gains by adopting software optimized for specific parts of their processes. Pharma companies often work closely with Contract Research Organizations (CROs) to outsource experiments, but they lack software tools for faster analyses and more effective optimization throughout the drug development process.

There are good reasons pharma hasn’t yet made the transition. Changing R&D lab processes is an incredibly weighty undertaking, with billions of dollars at stake. Many of the horizontal software companies that have transformed operations in other industries don’t understand the scientific and regulatory complexities of pharma. Life sciences datasets span wildly different data types and proprietary formats. And researchers are often trained at academic institutions that have not themselves moved to the cloud, so they learn to work on local machines.

Why is R&D changing now?

In the past couple of years, three large trends have helped change this status quo.

1. Much more data is being generated in R&D organizations

Recent years have unlocked high-throughput genetic sequencing and new multiomic datasets, such as:

- proteomics (the set of proteins produced by a cell or organism)
- transcriptomics (the set of RNA transcripts in a cell)
- metabolomics (the set of small-molecule metabolites in a cell)

They provide a much more detailed picture of what’s happening in disease and in response to interventions.

This deeper understanding is driven by more data. New high-resolution microscopes generate terabytes of data. Experts predict genomic data will require more than 100 petabytes of storage a day within the next decade as sequencing continues to get cheaper. Scientists now generate 10,000x more data per experiment than they did a decade ago.

At that scale, pen and paper and on-premises solutions will struggle even more. And the sheer number of datasets researchers want to combine and analyze will require better tooling.
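Combining datasets across modalities often starts with something as basic as aligning samples. This sketch joins hypothetical per-sample transcriptomic and proteomic summaries on a shared sample ID and reports samples that appear in only one assay. That kind of bookkeeping becomes error-prone by hand as the number of datasets grows. The sample IDs and feature names are invented, and real pipelines typically work with large matrices and much richer metadata.

```python
def join_modalities(*tables: dict[str, dict[str, float]]):
    """Inner-join per-sample feature dicts on sample ID.

    Returns (merged, unmatched): `merged` maps each sample present in every
    table to its combined features; `unmatched` lists samples missing from
    at least one table.
    """
    shared = set(tables[0])
    for table in tables[1:]:
        shared &= set(table)

    merged = {}
    for sample in sorted(shared):
        row: dict[str, float] = {}
        for table in tables:
            row.update(table[sample])
        merged[sample] = row

    all_samples = set().union(*tables)  # iterating a dict yields its keys
    unmatched = sorted(all_samples - shared)
    return merged, unmatched


# Hypothetical per-sample summaries from two assays (IDs and features are invented).
transcriptomics = {
    "S1": {"GENE_X_tpm": 12.5},
    "S2": {"GENE_X_tpm": 3.1},
    "S3": {"GENE_X_tpm": 8.0},
}
proteomics = {
    "S1": {"PROT_X_abundance": 0.91},
    "S3": {"PROT_X_abundance": 0.40},
    "S4": {"PROT_X_abundance": 0.77},
}

merged, unmatched = join_modalities(transcriptomics, proteomics)
print(sorted(merged))  # ['S1', 'S3']
print(unmatched)       # ['S2', 'S4']
```

Reporting the unmatched samples explicitly, rather than silently dropping them, is the design choice that matters here. A sample missing from one assay is often a lab or labeling problem worth investigating.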

2. The rise of new therapeutic modalities requires new tools and new roles

Much of drug discovery traditionally revolved around small molecules. The Benchling team offered a helpful example: Lipitor, Pfizer’s enormously successful cholesterol drug approved in 1997, has about 50 atoms, while an antibody today has ~25,000 atoms plus 20–30 different add-ons. Antibodies are operationally complex to produce, since many come from living culture systems. The genetic engineering and cell modification involved in working with living sources are far more complex than small-molecule chemistry. New techniques like CRISPR give researchers finer granularity and specificity in manipulating genetic sequences.

The use of these techniques is increasing. Biopharmaceuticals have grown from 15% to 50% of all medicines in pipelines, and biologics now account for 8 of the top 10 best-selling drugs. And these modalities continue to evolve: more than half of the biotherapeutics pipeline consists of innovative modalities, including gene therapy, cell therapy, CAR-T, bispecific antibodies, and mRNA treatments.

These new modalities require new workflow tools because their processes are different. The details of how samples are created and experiments are run matter even more for living systems, which are much more sensitive to changes than traditional chemical compounds. It’s increasingly important that the notebook used to track experiments syncs cleanly with input sources and results data. Pen and paper is no longer an option.

These new modalities also involve different teams. Small-molecule discovery may have required only medicinal chemists, but new modalities like RNA development involve biologists, chemists, bioinformaticians, and immunologists. While small-molecule discovery tools could be optimized for a single user type, new tools are required for everyone else involved in the R&D process.

Both the growth in data and these new modalities have helped push life sciences companies to the cloud; 71% of them are now prioritizing it.

3. More and different biotech startups

It’s easier to sell to new companies that don’t yet have a software stack than to rip out software that users are accustomed to and that companies may have invested billions of dollars in building. Moving a large pharma company off a core workflow or infrastructure tool can be akin to moving a health system off Epic. Having a set of startups to sell into has propelled software and infrastructure companies in other industries, and the same should prove true in life sciences. VC funding for early-stage biotech companies increased 4x from 2019 to 2021 and has remained high in current market conditions.

Companies selling new R&D solutions can balance shorter startup sales cycles against longer enterprise sales cycles, and they can grow alongside the startups they support. Startup customers are more willing to let them provide a broader set of services, even with a leaner team. All the while, these vendors are building robust capabilities and case studies that should make their products even stronger for the incumbents they will eventually sell to.

The Market Today

So what’s happening today?

1. A new generation of vertical software tools built for life sciences companies is saving researchers time.

2. New infrastructure is enabling much more sophisticated and repeatable analyses of data generated in labs.

3. Life sciences companies are increasingly looking to AI startups to help with R&D processes.

What are the different parts of the R&D software stack today?

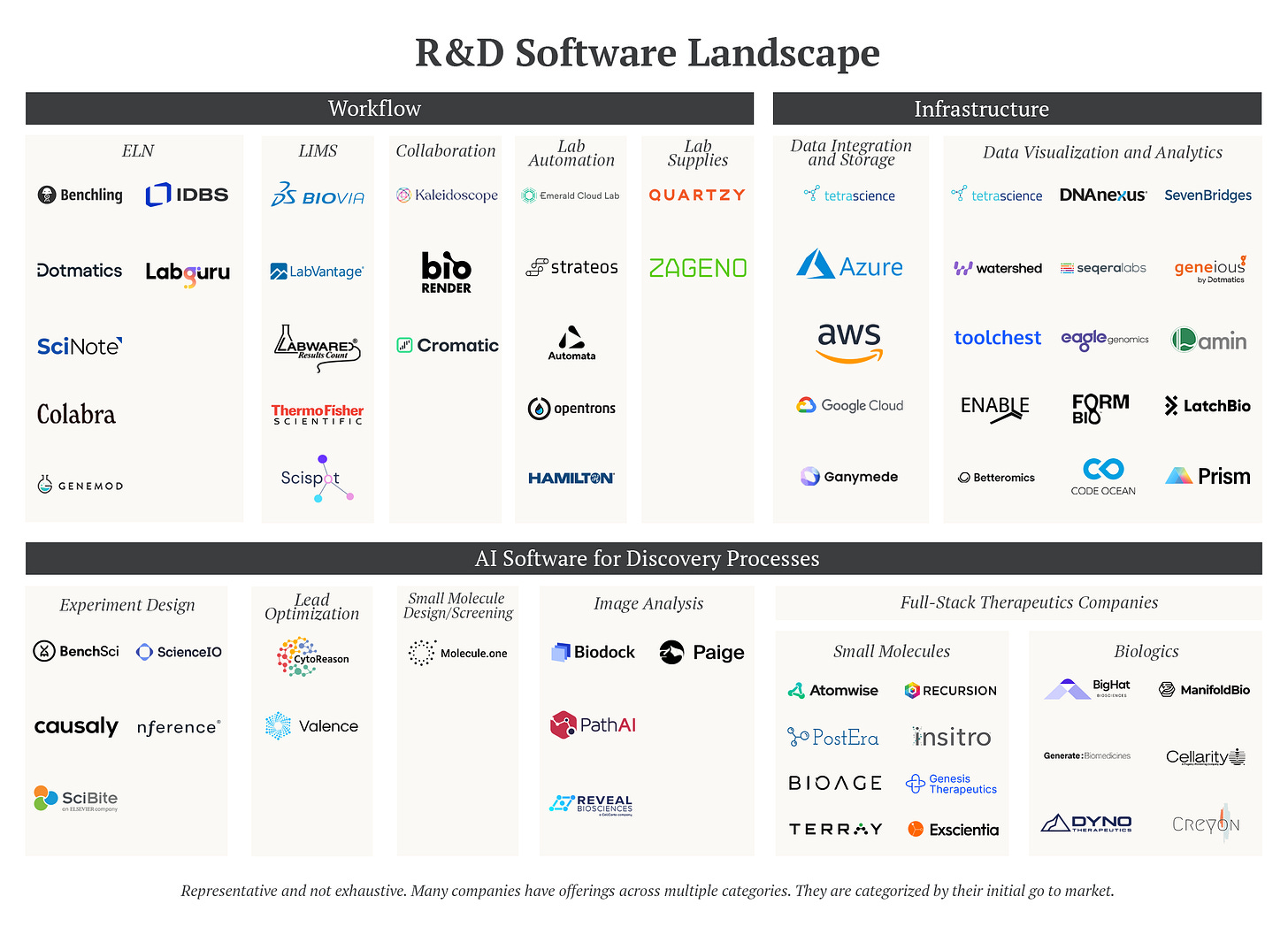

1. Life Sciences Workflow Software

A. Electronic Lab Notebook (ELN) - a software tool scientists use to organize their research across a wide range of experiments. Inputs include which experiments the researcher plans to run, the protocols for each experiment (e.g., how to insert a gene into a bacterium), and documentation of results. This was traditionally done with pen and paper, and much of the text is unstructured and free-flowing. Benchling has grown rapidly in this space; other major competitors include Dotmatics, SciNote, and others.

B. Lab Information Management System (LIMS) - a software tool that tracks the operations of a laboratory. This includes the usage and inventory of samples, reagents, and equipment, as well as the data that equipment outputs. Many ELN companies also provide LIMS services and vice versa.
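At its core, the inventory side of a LIMS is a data model that ties each sample or reagent lot to where it was used. The sketch below is a deliberately tiny, hypothetical version. Reagent lots are received, consumed against an experiment ID, and every consumption is logged so usage can be traced back later. The class names, fields, and IDs are illustrative assumptions, not the schema of any LIMS product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ReagentLot:
    """One received lot of a reagent (hypothetical schema)."""
    lot_id: str
    name: str
    quantity_ul: float  # remaining volume in microliters


@dataclass
class UsageEvent:
    lot_id: str
    experiment_id: str
    amount_ul: float
    timestamp: str  # ISO 8601, UTC


@dataclass
class Inventory:
    lots: dict[str, ReagentLot] = field(default_factory=dict)
    usage_log: list[UsageEvent] = field(default_factory=list)

    def receive(self, lot: ReagentLot) -> None:
        self.lots[lot.lot_id] = lot

    def consume(self, lot_id: str, amount_ul: float, experiment_id: str) -> None:
        """Deduct volume from a lot and record which experiment used it."""
        lot = self.lots[lot_id]
        if amount_ul > lot.quantity_ul:
            raise ValueError(f"only {lot.quantity_ul} uL of {lot_id} left")
        lot.quantity_ul -= amount_ul
        self.usage_log.append(
            UsageEvent(lot_id, experiment_id, amount_ul, datetime.now(timezone.utc).isoformat())
        )

    def lots_used_in(self, experiment_id: str) -> set[str]:
        """Trace an experiment back to the reagent lots it consumed."""
        return {e.lot_id for e in self.usage_log if e.experiment_id == experiment_id}


inv = Inventory()
inv.receive(ReagentLot("LOT-17", "polymerase", 500.0))
inv.consume("LOT-17", 25.0, "EXP-042")
print(inv.lots["LOT-17"].quantity_ul)  # 475.0
print(inv.lots_used_in("EXP-042"))     # {'LOT-17'}
```

Tracing an experiment back to specific reagent lots lets a lab investigate, for example, a batch of failed runs that all drew on the same lot.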

C. Collaboration - as R&D organizations grow to include large teams of lab scientists and computational biologists, each with its own stack of tools, new collaboration tools are needed. Companies like Kaleidoscope help track projects and plan workflows across these disparate tools. BioRender helps scientists communicate by making it easier to create and share detailed, professional scientific figures for discussing experiment design and results. Cromatic is an interesting startup helping life sciences companies better choose and collaborate with their external Contract Research Organizations (CROs).

D. Lab Automation - a new set of companies removes the need for manual lab work. Some do this by automating the work with machines (Automata); others run entire lab experiments on that automation for their customers (Emerald Cloud Lab, Strateos).

E. Lab Supplies - as labs get more complex, managing supplies has become increasingly difficult. Companies like Quartzy and Zageno help life sciences companies manage inventory, supplies and requests.

2. Infrastructure

A. Data integration and storage - data generated locally by equipment is increasingly stored in cloud warehouses through instrument integrations that it doesn’t make sense for any individual company to build itself. TetraScience helps integrate the data from this equipment into a centralized data warehouse. Companies are also helping push this data into ELNs and LIMS and making it easier to exchange data with life sciences companies’ outsourced partners.

B. Data harmonization, visualization and analytics - the bioinformatics market is projected to exceed $5B by 2026. Working with all of this new data used to require rare employees with deep expertise in both biology and software. There simply aren’t enough of them, so today a variety of companies are helping life sciences organizations work better with the data that comes off these devices. This work includes:

- normalizing and standardizing data from different instruments
- managing versioning and data provenance
- automating workflows
- orchestrating infrastructure to manage resources and cost
- easy-to-use querying and visualization

In other industries, horizontal infrastructure tools have emerged to solve these problems. But biology has unique file types, visualizations, regulations, and interactions with physical machines. So a set of companies is emerging to bring biology the no-code and basic SQL workflows that infrastructure players have made common in other industries. Instrument companies like Illumina and 10x Genomics provide detailed analytics software packages for their own instruments.

Others are more instrument-agnostic, like TetraScience, 7Bridges, Betteromics, DNAnexus, Eagle Genomics, Enable Medicine, Form Bio, Lamin, LatchBio, Seqera Labs, Toolchest, and Watershed. Some serve a variety of pharma data types, while others specialize in particular modalities (e.g., cell and gene therapy). These companies also differ in what they emphasize. Some favor flexibility, letting bioinformaticians run customized pipelines as experiment types and analyses change. Others favor standardized no-code workflows that let academic biologists run quick analyses on raw data.
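Data provenance is what answers the auditing questions researchers struggle with: what cleaning was done, and with which version. One minimal way to sketch it, assuming each processing step is a plain Python function, is to record four things per step: the step name, a version string, its parameters, and a SHA-256 hash of its input and output. A reviewer can then confirm that one step's output is exactly the next step's input. The step functions, version strings, and record layout here are hypothetical; production platforms track far more (environment, code commit, user, timestamps).

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ProvenanceRecord:
    step: str
    version: str
    params: dict[str, Any]
    input_sha256: str
    output_sha256: str


def digest(obj: Any) -> str:
    """Stable content hash of JSON-serializable data."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode("utf-8")).hexdigest()


def run_step(name: str, version: str, func: Callable[..., Any], data: Any,
             params: dict[str, Any], ledger: list[ProvenanceRecord]) -> Any:
    """Run one processing step and append a provenance record for it."""
    output = func(data, **params)
    ledger.append(ProvenanceRecord(name, version, dict(params), digest(data), digest(output)))
    return output


# Hypothetical cleaning and transformation steps.
def drop_below(values: list[float], threshold: float) -> list[float]:
    return [v for v in values if v >= threshold]


def scale(values: list[float], factor: float) -> list[float]:
    return [v * factor for v in values]


ledger: list[ProvenanceRecord] = []
raw = [0.02, 0.41, 0.38, 0.01]
cleaned = run_step("drop_below", "1.0.0", drop_below, raw, {"threshold": 0.05}, ledger)
scaled = run_step("scale", "2.1.0", scale, cleaned, {"factor": 100}, ledger)

# Each step's input hash should match the previous step's output hash.
print(ledger[1].input_sha256 == ledger[0].output_sha256)  # True
for rec in ledger:
    print(rec.step, rec.version, rec.params)
```

Each record hashes both sides of a step. A reviewer can therefore detect whether data was altered between steps and see exactly which version and parameters produced a given result.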

3. AI for Discovery Processes

A variety of companies are starting to offer AI products that improve the speed and quality of work R&D teams have traditionally done in-house. When designing experiments, scientists often look to past literature to determine what is most likely to succeed. This literature is vast and unorganized, and companies like BenchSci, Causaly, and Science.io help researchers draw insights from it more efficiently. There are also tools for image analysis (BioDock, PathAI, Paige, and Reveal Biosciences), small-molecule design, and lead optimization. Hardware companies have also built impressive algorithms on top of their devices. As biotechs lean on vendors to increase productivity and build fewer capabilities internally, the barriers to starting a new biotech should keep falling.

Though out of scope for this piece, I’d be remiss not to mention some of the most exciting full-stack companies. These leverage advances in all of the above categories to make therapeutics themselves. These AI drug discovery companies often start by selling parts of their approach to pharma companies for upfront cash. They then commercialize their insights through joint ventures with pharma partners. They include Atomwise, BigHat, BioAge, Exscientia, Genesis Therapeutics, Insitro, ManifoldBio, Recursion, Terray, and others. The process-specific companies have quicker feedback loops to prove their products work, though the end-to-end startups may ultimately capture more value from their insights.

Questions For the Space and What’s Next

1. How specific to therapeutic modalities will tools be?

Cell and gene therapy R&D is quite different from general large-molecule R&D, which in turn differs substantially from small-molecule development. Product-led growth generally works well in biotech because researchers and academics have considerable autonomy over the tools they use. Will teams working on all of these modalities use the same workflow tools and infrastructure providers? Or will software and infrastructure split along the specific needs of each modality?

2. How big is the opportunity outside pharma/biotech?

Synthetic biology clearly has exciting applications in agriculture, manufacturing, materials science, food production, and more. But the absolute size of that opportunity remains unclear. Growth in markets outside pharma/biotech would be a huge tailwind for software and infrastructure companies in the space.

3. How many big software companies can be created in the space?

To date, the most valuable biotech software companies have been core systems of record driving workflows (Veeva has a market cap over $25B and Benchling was last valued at over $6B). A similar workflow opportunity seems to exist in drug manufacturing, where companies currently use legacy LIMS and Manufacturing Execution System (MES) software. For software companies building within R&D, it’s unclear whether surfacing insights alone is enough to sustain a standalone company. These companies may need to use features like experiment recommendations as a wedge to own more of the workflow.

4. What will the biotech R&D team of the future look like? How much will be done in-house?

Companies providing AI for discovery processes face an interesting choice. They can build tools for researchers within a biotech company to use, or they can take over an entire process and come back with optimized outputs. A similar question exists for end-to-end AI drug discovery companies. They can sell the parts of the drug development process they’re best at to big pharma, or they can do the entire development themselves. Which research capabilities will biotechs decide to keep in-house, and which path will prove more lucrative for these companies? One could imagine a future in which biotechs rely on a set of horizontal players, each optimized for an individual step of drug development. Regardless, biotech as a whole does seem headed toward a relative unbundling, which could make it easier to start these companies.

5. If more and more of what R&D teams do is outsourced to other vendors, what will ensure collaboration and coordination across all these activities?

Many of the tools we’ve discussed are designed to coordinate activity within a single company’s research organization. But as more and more of R&D is outsourced, tools to coordinate across these vendors will be crucial and have interesting network effects.

There’s clearly incredible momentum in this space today and I’m excited to continue diving in and seeing how this all plays out.

A huge thanks to Archit Sheth-Shah, Dave Light, Sanjay Saraf, Ashoka Rajendra, Shaq Vayda and Nisarg Patel for their helpful feedback as well as Elliot Hershberg and the Benchling, LatchBio, Bessemer and a16z teams for their previous content which was quite helpful in writing this piece.
